Unlocking Insights: The Imperative of Structured Data Sets from Unstructured Sources

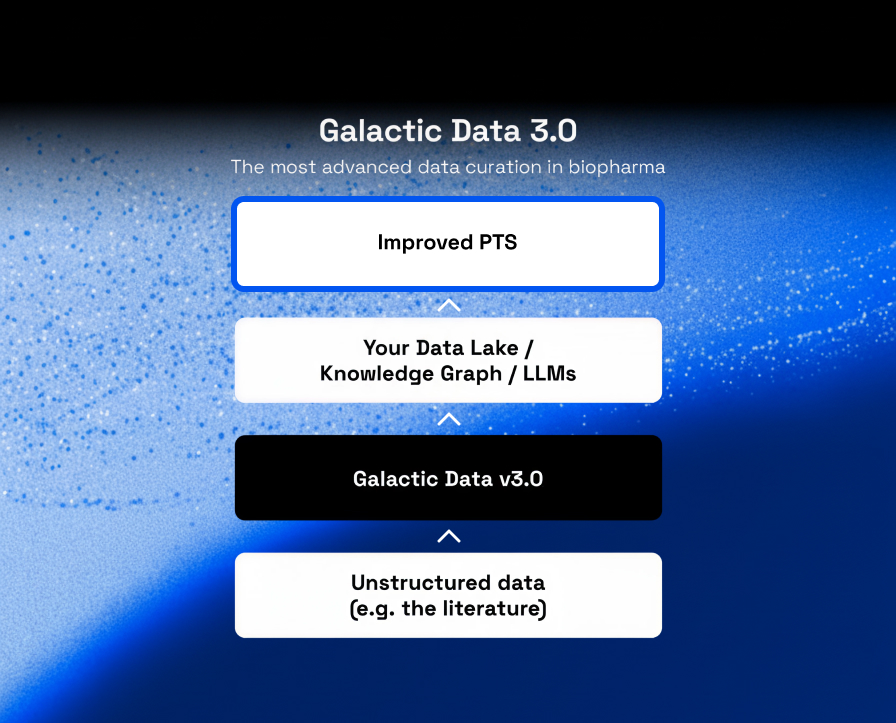

For AI to realise its promise in drug discovery, we must leverage the prior knowledge accumulated over decades of scientific and medical research. The scientific literature, clinical trial reports, patents and other text-based resources are treasure troves of information, but their unstructured nature makes it difficult to use their content in new analyses. Historically, databases have been curated from the literature either manually or through natural language processing (NLP), and each approach has its own limitations. The advent of large language models (LLMs), however, introduces a paradigm shift, offering the potential for both human-level quality and machine-level breadth.

The Need for Structured Data Sets

The biomedical literature today contains around 46 million articles and grows by approximately 2 million articles every year. It holds every biomedical discovery that researchers have ever considered significant enough to commit to paper and share with each other. The prior knowledge it embodies is the product of decades of research and (conservatively) trillions of dollars of investment.

Against this backdrop, the rise of data-hungry AI approaches is forcing us to leverage every data point at our disposal. LLM-based chatbots provide excellent Q&A-style search interfaces to this data, but they are prone to hallucination, which makes their answers difficult to interpret in a rigorous scientific context.

Chatbots are also poorly suited to key tasks in biomedical science, such as predicting a patient's survival on a new drug and identifying the key characteristics of patients who respond. This kind of work requires models trained on well-defined, well-organised datasets. It is therefore critical that we extract and structure the prior knowledge locked away in the scientific literature to inform our current modelling efforts.

Manual curation has traditionally been the gold standard for producing high-quality structured databases from the literature. However, it is time-consuming, labour-intensive and limited in throughput, so it is simply not feasible to curate large-scale data sets manually from the vast corpus of scientific literature.

NLP, on the other hand, offers the potential for high throughput and scalability. However, traditional NLP models have struggled to achieve human-level accuracy in extracting and structuring information from complex scientific texts. This has limited their practical utility in many real-world applications.

The Promise of LLMs

LLMs represent a transformative new paradigm in NLP, and using them for information extraction is distinct from using them as chatbots. These models are trained on massive amounts of text and have demonstrated an unprecedented ability to understand and generate human language, with promising results in tasks such as extracting and structuring information from the scientific literature. Their deeper understanding of text also means that they are not limited to within-sentence inference: they can infer semantic meaning across larger spans of text, such as paragraphs or whole sections of a manuscript.

A key advantage of LLMs is their ability to learn from and adapt to new data: they can be fine-tuned on specific domains, such as the scientific literature or clinical trial reports, to achieve even higher accuracy. Fine-tuning is also essential for performance, and for ensuring that the output of what is fundamentally a discriminative (in other words, not generative) task faithfully corresponds to the input.

LLMs can also automate many of the time-consuming tasks involved in data curation, such as data cleaning and normalisation. A virtuous cycle of curation can be achieved by first bootstrapping with hand-curated data and using it to train a model that pre-curates new documents. The next generation of documents then starts with (mostly) good annotations, which the curators correct and add to. The model is then fine-tuned further on the corrected output, so with each iteration the curators work faster and the model improves.
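
The curation cycle described above can be sketched as a simple loop. This is a toy under loud assumptions: `ToyCurationModel`, `curator_review` and `curation_loop` are hypothetical names, the "model" merely memorises curator-approved mention/label pairs in place of a real LLM fine-tuning run, and the curator is simulated by a gold-standard annotation for each document. What it illustrates is the shape of the loop (bootstrap, pre-curate, correct, retrain), not how an LLM learns.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ToyCurationModel:
    """Hypothetical stand-in for a fine-tuned LLM.

    It only memorises (lower-case mention -> label) pairs from curated
    documents, which is enough to show the loop, not how an LLM learns.
    """

    lexicon: dict[str, str] = field(default_factory=dict)

    def fine_tune(self, curated: list[dict[str, str]]) -> None:
        # "Fine-tuning" here simply merges curator-approved annotations.
        for annotations in curated:
            self.lexicon.update(annotations)

    def pre_curate(self, document: str) -> dict[str, str]:
        # Propose a label for every known mention found in the text
        # (naive case-insensitive substring match).
        text = document.lower()
        return {m: label for m, label in self.lexicon.items() if m in text}


def curator_review(
    proposed: dict[str, str], gold: dict[str, str]
) -> tuple[dict[str, str], int]:
    """Simulated curator: keeps correct proposals, fixes or drops wrong ones
    and adds anything the model missed. Returns (final annotations, n accepted)."""
    accepted = sum(1 for m, label in proposed.items() if gold.get(m) == label)
    return dict(gold), accepted


def curation_loop(seed, batches):
    """Bootstrap from hand-curated data, then alternate pre-curation,
    curator correction and retraining, one batch of documents at a time.
    Also returns, per batch, the fraction of final annotations that the
    model had already proposed correctly."""
    model = ToyCurationModel()
    model.fine_tune(seed)  # bootstrap with hand-curated annotations
    history = []
    for batch in batches:
        curated, accepted, total = [], 0, 0
        for document, gold in batch:
            proposed = model.pre_curate(document)
            final, n_ok = curator_review(proposed, gold)
            accepted += n_ok
            total += len(final)
            curated.append(final)
        history.append(accepted / total if total else 0.0)
        model.fine_tune(curated)  # next generation starts from better annotations
    return model, history


# Illustrative data only; mentions are stored in lower case.
seed = [{"egfr": "gene", "erlotinib": "drug"}]
batches = [
    [("Erlotinib inhibits EGFR in NSCLC.",
      {"erlotinib": "drug", "egfr": "gene", "nsclc": "disease"})],
    [("EGFR mutations predict response in NSCLC.",
      {"egfr": "gene", "nsclc": "disease"})],
]
model, history = curation_loop(seed, batches)
print(history)  # → [0.6666666666666666, 1.0]
```

With this illustrative data, the share of final annotations the model had already proposed rises from two thirds in the first batch to all of them in the second. In a real workflow the retraining step would be an LLM fine-tuning run and the review step a curation interface; neither is specified here.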

Finally, a major advantage of LLMs over traditional NLP is the flexibility they offer in using new ontologies. In traditional NLP, the ontology (a controlled, structured vocabulary describing a scientific domain, e.g. the Gene Ontology) defines what the algorithms search for in the text, so the approach can be no better than the quality and completeness of the ontology. LLMs, on the other hand, extract information from the text without being restricted to ontology terms, and the ontology is applied only at the very end of the process to structure the output. Ontologies therefore remain a critical component of an LLM-based workflow, but they can be swapped in and out much more flexibly depending on the field of study.
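
To illustrate this ordering, where extraction comes first and the ontology is applied only at the end, here is a minimal sketch of the final structuring step. It assumes the extracted mentions already exist as plain strings from some upstream LLM step (not shown), and it uses exact synonym lookup as a deliberately simple stand-in for real entity normalisation. The functions `build_index` and `normalise`, both ontologies and all of their term IDs are hypothetical.

```python
from __future__ import annotations


def build_index(ontology: dict[str, tuple[str, list[str]]]) -> dict[str, str]:
    """Map every preferred label and synonym (case-folded) to its term ID."""
    index: dict[str, str] = {}
    for term_id, (label, synonyms) in ontology.items():
        for name in (label, *synonyms):
            index[name.casefold()] = term_id
    return index


def normalise(
    mentions: list[str], ontology: dict[str, tuple[str, list[str]]]
) -> tuple[dict[str, str], list[str]]:
    """Attach ontology term IDs to free-text mentions extracted upstream.

    Mentions with no match are returned separately rather than discarded,
    so they remain available for review or for a different ontology.
    """
    index = build_index(ontology)
    mapped: dict[str, str] = {}
    unmapped: list[str] = []
    for mention in mentions:
        term_id = index.get(mention.strip().casefold())
        if term_id is None:
            unmapped.append(mention)
        else:
            mapped[mention] = term_id
    return mapped, unmapped


# Mentions as they might come out of an upstream extraction step (hypothetical).
mentions = ["programmed cell death", "Cell proliferation", "tumour shrinkage"]

# Two tiny hypothetical ontologies with made-up term IDs:
# term ID -> (preferred label, synonyms).
ontology_a = {
    "A:0001": ("apoptosis", ["programmed cell death"]),
    "A:0002": ("cell proliferation", []),
}
ontology_b = {
    "B:0001": ("tumour shrinkage", ["tumor regression"]),
}

print(normalise(mentions, ontology_a))
# → ({'programmed cell death': 'A:0001', 'Cell proliferation': 'A:0002'}, ['tumour shrinkage'])
print(normalise(mentions, ontology_b))
# → ({'tumour shrinkage': 'B:0001'}, ['programmed cell death', 'Cell proliferation'])
```

Because the extraction step never consulted an ontology, swapping `ontology_a` for `ontology_b` changes only which mentions receive identifiers; mentions that do not map are kept in the unmapped list rather than lost.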

Conclusion

Manual curation and traditional NLP both have limitations, but LLMs offer a new paradigm with the potential to overcome them. By combining human-level quality with machine-level breadth, LLMs could revolutionise the curation of structured data sets from unstructured sources. Effectively leveraging prior knowledge from the literature will improve our understanding of disease mechanisms, drug targets and clinical response to therapy.
